Bayesian-Assisted Inference from Visualized Data

Yea-Seul Kim, Paula Kayongo, Madeleine Grunde-McLaughlin and Jessica Hullman

IEEE Trans. Visualization & Comp. Graphics (Proc. INFOVIS) 2020

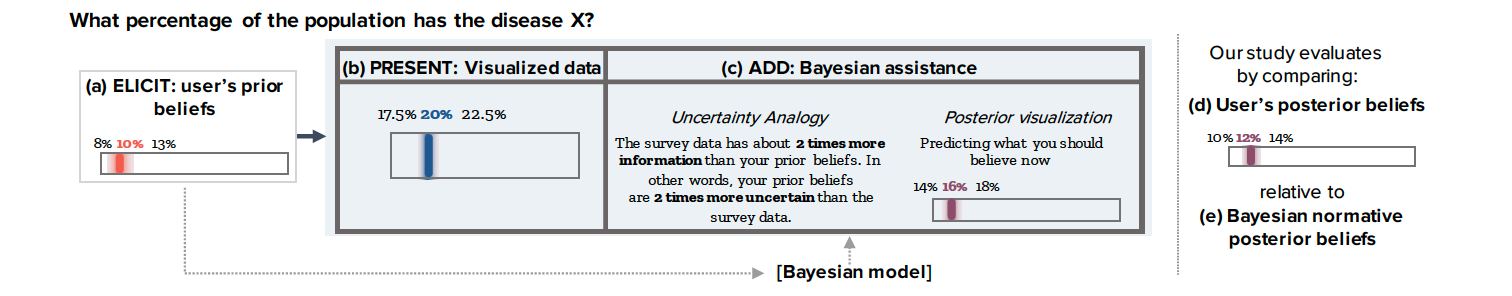

Uncertainty visualization designs evaluated in our experiment.

Abstract

A Bayesian view of data interpretation suggests that a visualization user should update their existing beliefs about a parameter’s value in accordance with the amount of information about the parameter value captured by the new observations. Extending recent work applying Bayesian models to understand and evaluate belief updating from visualizations, we show how the predictions of Bayesian inference can be used to guide more rational belief updating. We design a Bayesian inference-assisted uncertainty analogy that numerically relates uncertainty in observed data to the user’s subjective uncertainty, and a posterior visualization that prescribes how a user should update their beliefs given their prior beliefs and the observed data. In a pre-registered experiment with 4,800 participants, we find that when a newly observed data sample is relatively small (N=158), both techniques reliably improve people’s Bayesian updating on average compared to the current best practice of visualizing uncertainty in the observed data. For large data samples (N=5,208), where people’s updated beliefs tend to deviate more strongly from the prescriptions of a Bayesian model, we find evidence that the effectiveness of the two forms of Bayesian assistance may depend on people’s proclivity toward trusting the source of the data. We discuss how our results provide insight into individual processes of belief updating and subjective uncertainty, and how understanding these aspects of interpretation paves the way for more sophisticated interactive visualizations for analysis and communication.
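To make the idea of a prescribed Bayesian update concrete, here is a minimal sketch. It assumes the parameter is a proportion and that beliefs are modeled as Beta distributions updated with binomial data, a common conjugate choice that is not necessarily the exact model used in the experiment. The helpers `prior_from_mean_sd`, `bayesian_update`, and `data_to_prior_ratio` are hypothetical names for illustration. The ratio of sample size to the prior's pseudo-count is one possible way to relate the uncertainty in the data to a person's subjective uncertainty. It is not presented as the paper's analogy. The 40% observed rate is an illustrative assumption; only the two sample sizes come from the abstract.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Belief about an unknown proportion p, modeled as Beta(alpha, beta)."""

    alpha: float
    beta: float

    @property
    def pseudo_count(self) -> float:
        # Number of observations this belief is "worth" under the Beta model.
        return self.alpha + self.beta

    @property
    def mean(self) -> float:
        return self.alpha / self.pseudo_count

    @property
    def sd(self) -> float:
        n = self.pseudo_count
        return (self.alpha * self.beta / (n * n * (n + 1))) ** 0.5


def prior_from_mean_sd(mean: float, sd: float) -> BetaBelief:
    """Hypothetical helper: turn an elicited mean and SD into a Beta prior
    using the method of moments."""
    var = sd * sd
    if not 0.0 < mean < 1.0 or not 0.0 < var < mean * (1.0 - mean):
        raise ValueError("mean must be in (0, 1) and sd small enough for a Beta")
    k = mean * (1.0 - mean) / var - 1.0
    return BetaBelief(alpha=mean * k, beta=(1.0 - mean) * k)


def bayesian_update(prior: BetaBelief, successes: int, n: int) -> BetaBelief:
    """Conjugate update: Beta prior + binomial sample -> Beta posterior."""
    if not 0 <= successes <= n:
        raise ValueError("successes must lie in [0, n]")
    return BetaBelief(prior.alpha + successes, prior.beta + (n - successes))


def data_to_prior_ratio(prior: BetaBelief, n: int) -> float:
    """Hypothetical analogy: how many 'priors' worth of evidence the sample holds."""
    return n / prior.pseudo_count


# Usage: prior elicited as mean 0.5, SD 0.1 (worth about 24 observations);
# hypothetical observed rate of 40% at the two sample sizes from the abstract.
prior = prior_from_mean_sd(0.5, 0.1)
for n in (158, 5208):
    successes = round(0.4 * n)
    post = bayesian_update(prior, successes, n)
    print(
        f"N={n}: posterior mean {post.mean:.3f}, SD {post.sd:.4f}, "
        f"sample worth {data_to_prior_ratio(prior, n):.1f}x the prior"
    )
```

The posterior mean is a weighted average of the prior mean and the observed proportion. The weight on the data is n / (n + alpha + beta). With N=158 the prior still pulls the prescription noticeably away from 40%. With N=5,208 the posterior is almost entirely determined by the data, so a Bayesian model prescribes a much larger and more confident update.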