Evaluating the Utility of Conformal Prediction Sets for AI-Advised Image Labeling

Dongping Zhang, Angelos Chatzimparmpas, Negar Kamali, Jessica Hullman

ACM Human Factors in Computing Systems (CHI) 2024 | BEST PAPER HONORABLE MENTION

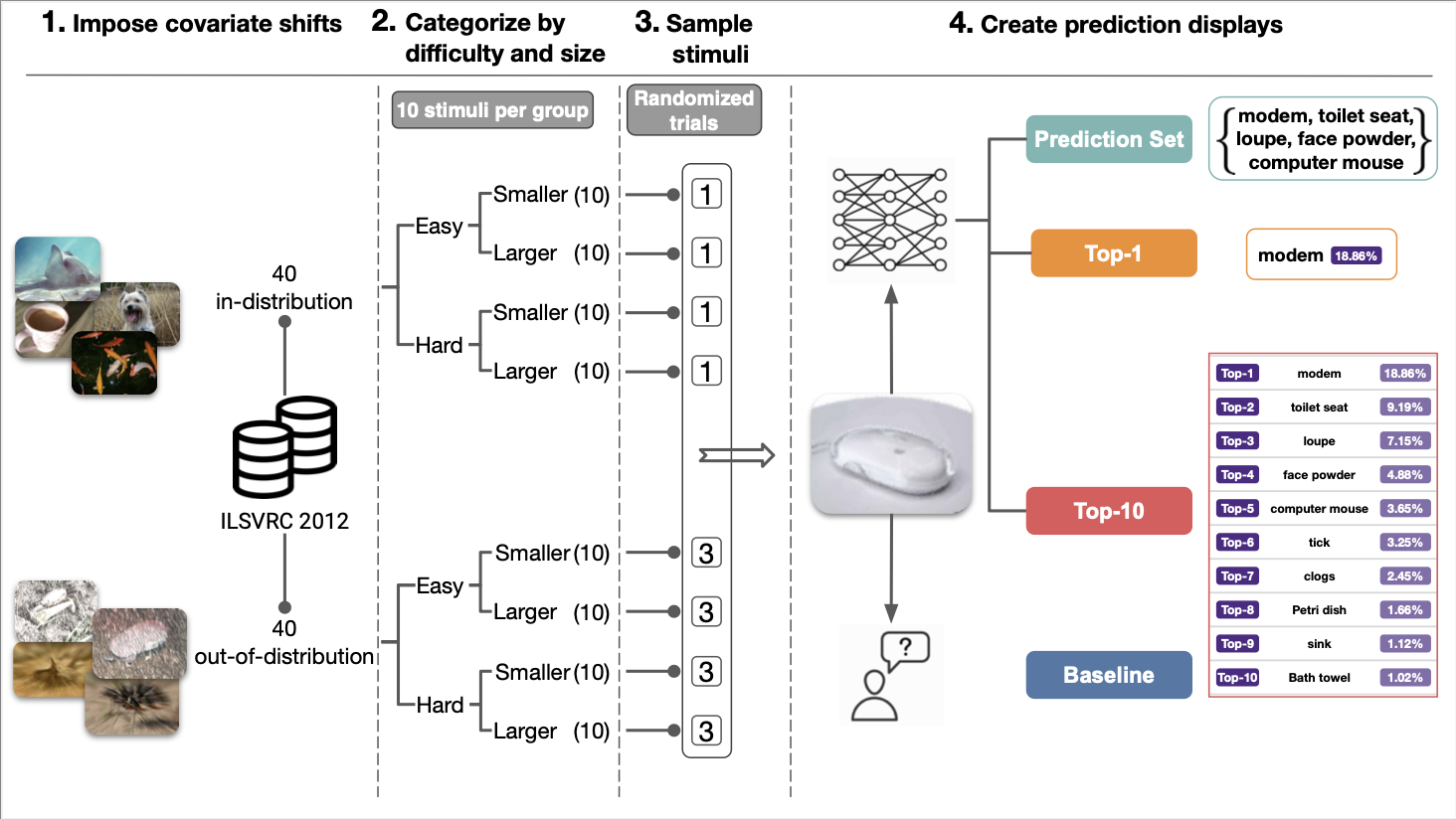

Overview diagram of our key experimental manipulations: (1) Five different covariate shifts are imposed through synthetic image corruption, creating five replications of the conformal hold-out set, each containing out-of-distribution (OOD) images. (2) Images in each conformal hold-out set are categorized by difficulty, measured by the classifier's prediction confidence, and by the size of the derived prediction set. Ten task images representative of each resulting group are selected. (3) Participants label 16 task images sampled from 80 candidate images: four in-distribution and 12 OOD, balanced by difficulty and set size, and presented in randomized order. (4) Depending on their assigned condition, participants complete the labeling tasks either without predictions (i.e., baseline) or with a prediction display that varies in the uncertainty-quantification content it provides (i.e., Top-1, Top-10, or prediction set).
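
Step (2) sorts hold-out images by difficulty and by prediction-set size. The following is a minimal sketch of that bucketing, assuming each image's softmax probabilities and prediction set are already available. The cutoffs `CONFIDENCE_CUTOFF` and `SMALL_SET_MAX`, the function names, and the use of uniform random draws in place of choosing "representative" images are all hypothetical illustrations, not the study's actual procedure.

```python
import random
from collections import defaultdict

# Hypothetical cutoffs chosen for illustration only; the study's actual
# category boundaries are not stated in this overview.
CONFIDENCE_CUTOFF = 0.5  # top-1 softmax probability >= cutoff counts as "easy"
SMALL_SET_MAX = 3        # prediction sets with <= this many labels count as "small"


def categorize(softmax_probs, prediction_set):
    """Label one image by difficulty (top-1 confidence) and prediction-set size."""
    difficulty = "easy" if max(softmax_probs) >= CONFIDENCE_CUTOFF else "hard"
    size = "small" if len(prediction_set) <= SMALL_SET_MAX else "large"
    return difficulty, size


def select_task_images(images, per_group=10, seed=0):
    """Group (image_id, probs, pred_set) records and draw up to per_group ids per group.

    Uniform random draws are an assumed stand-in for selecting
    "representative" images; the real selection criterion may differ.
    """
    groups = defaultdict(list)
    for image_id, probs, pred_set in images:
        groups[categorize(probs, pred_set)].append(image_id)
    rng = random.Random(seed)  # seeded so the draw is reproducible
    return {
        key: rng.sample(ids, min(per_group, len(ids)))
        for key, ids in groups.items()
    }


if __name__ == "__main__":
    demo = [
        ("img_a", [0.90, 0.05, 0.05], {0}),
        ("img_b", [0.30, 0.25, 0.25, 0.20], {0, 1, 2, 3}),
        ("img_c", [0.60, 0.30, 0.10], {0, 1}),
    ]
    print(select_task_images(demo))
```

Keeping difficulty and set size as two separate labels mirrors the caption's point that the two are distinct axes: a confidently classified image can still receive a large set, and vice versa.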

Abstract

As deep neural networks are increasingly deployed in high-stakes domains, their black-box nature makes uncertainty quantification challenging. We investigate the effects of presenting conformal prediction sets, a distribution-free class of methods for generating prediction sets with specified coverage, to express uncertainty in AI-advised decision-making. Through a large online experiment, we compare the utility of conformal prediction sets to displays of Top-1 and Top-k predictions for AI-advised image labeling. In a pre-registered analysis, we find that the utility of prediction sets for accuracy varies with the difficulty of the task: while they yield accuracy on par with or lower than Top-1 and Top-k displays for easy images, prediction sets excel at assisting humans in labeling out-of-distribution (OOD) images, especially when the set size is small. Our results empirically pinpoint practical challenges of conformal prediction sets and offer implications for how to incorporate them into real-world decision-making.
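
To make "prediction sets with specified coverage" concrete, here is a minimal sketch of split conformal prediction next to the Top-1 and Top-k displays being compared. It assumes the simple nonconformity score s(x, y) = 1 - p_y(x), one common choice; the paper may use a different score function, so treat this as an illustrative assumption. All function names are hypothetical. If calibration and test points are exchangeable, sets built this way contain the true label with probability at least 1 - alpha.

```python
import math


def conformal_threshold(cal_probs, cal_labels, alpha=0.1):
    """Split conformal calibration with score s(x, y) = 1 - p_y(x).

    Returns qhat, the ceil((n + 1)(1 - alpha))-th smallest calibration score.
    """
    scores = sorted(1.0 - probs[y] for probs, y in zip(cal_probs, cal_labels))
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))  # 1-indexed order statistic
    if rank > n:
        return math.inf  # too few calibration points: every label is included
    return scores[rank - 1]


def prediction_set(probs, qhat):
    """All labels whose nonconformity score 1 - p is at most qhat."""
    return {label for label, p in enumerate(probs) if 1.0 - p <= qhat}


def top_k(probs, k):
    """Indices of the k highest-probability labels, most likely first."""
    return sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]


if __name__ == "__main__":
    # Toy 3-class calibration data (softmax outputs and true labels).
    cal_probs = [[0.8, 0.1, 0.1], [0.6, 0.3, 0.1], [0.2, 0.7, 0.1], [0.5, 0.4, 0.1]]
    cal_labels = [0, 0, 1, 1]
    qhat = conformal_threshold(cal_probs, cal_labels, alpha=0.2)

    test_probs = [0.5, 0.45, 0.05]
    print("Top-1:", top_k(test_probs, 1))
    print("Top-2:", top_k(test_probs, 2))
    print("Prediction set:", sorted(prediction_set(test_probs, qhat)))
```

With only four calibration points the guarantee is loose; a real hold-out set would contain many images. Unlike Top-1 and Top-k displays, which always show a fixed number of labels, the set size here adapts to how the classifier's probability mass is spread on each image.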