Odds and Insights: Decision Quality in Visual Analytics Under Uncertainty

Abhraneel Sarma, Xiaoying Pu, Yuan Cui, Eli T Brown, Michael Correll and Matthew Kay

ACM Human Factors in Computing Systems (CHI) 2024 | BEST PAPER HONORABLE MENTION

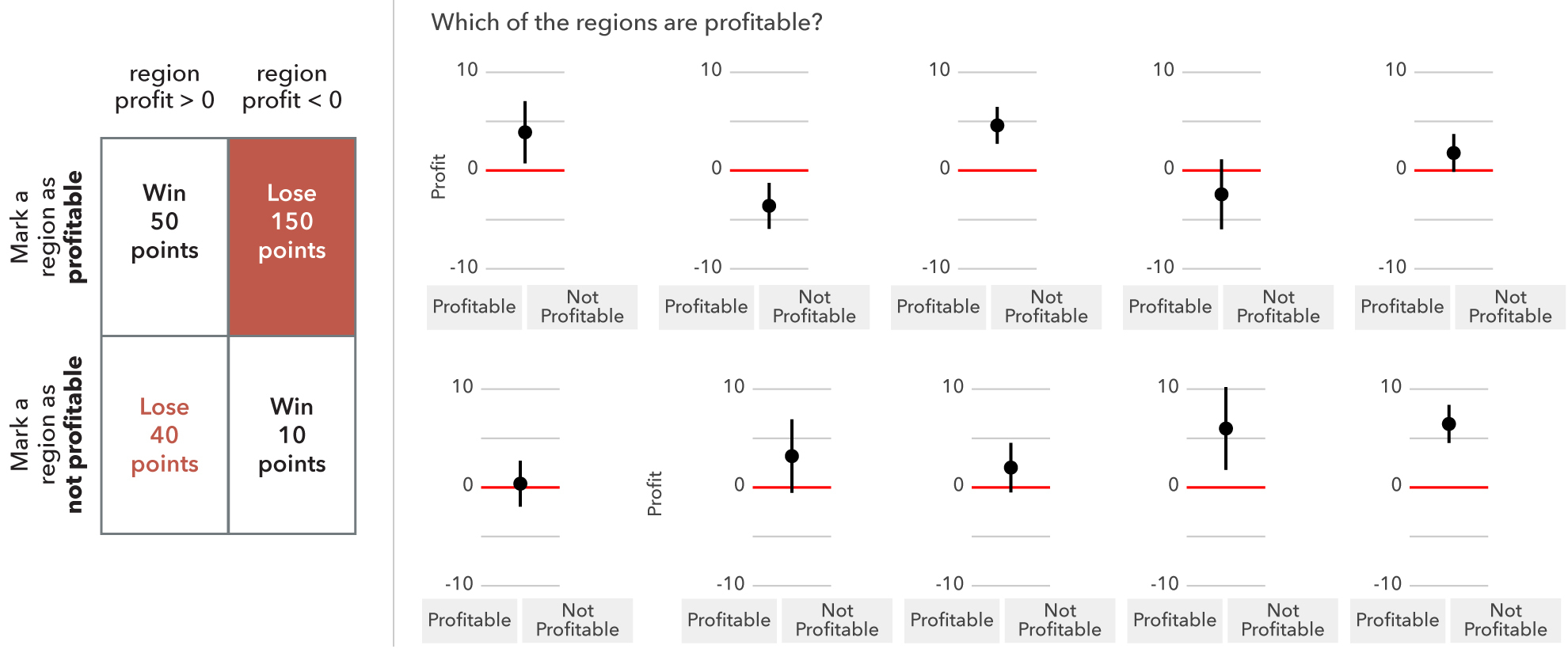

A task to test for multiple comparisons: we asked participants to perform an incentivized task in an exploratory data analysis (EDA) scenario that involved making multiple comparisons. Such tasks require participants to account for the multiple comparisons problem and to be more conservative in their decisions in order to maximize their payout under the incentive structure.
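Some simple arithmetic shows why multiple comparisons call for more conservative decisions. The sketch below assumes m independent comparisons in which every null hypothesis is true (so any "finding" is noise) and a per-comparison threshold of alpha = 0.05. The helper name `prob_any_false_discovery` is hypothetical and not part of the study's materials. For contrast, it also applies the Bonferroni correction, a standard conservative adjustment that is not the procedure used in the study.

```python
def prob_any_false_discovery(m: int, alpha: float = 0.05) -> float:
    """Probability of at least one false positive across m independent
    tests when every null hypothesis is true (the family-wise error rate).
    Hypothetical helper for illustration only."""
    return 1.0 - (1.0 - alpha) ** m


if __name__ == "__main__":
    for m in (1, 5, 20):
        # Each comparison tested at the usual alpha = 0.05.
        uncorrected = prob_any_false_discovery(m)
        # Bonferroni: test each comparison at alpha / m instead.
        bonferroni = prob_any_false_discovery(m, 0.05 / m)
        print(f"m={m:>2}  uncorrected={uncorrected:.3f}  bonferroni={bonferroni:.3f}")
```

Under these assumptions, 20 comparisons of pure noise give roughly a 64% chance of at least one false discovery if each comparison is tested at 0.05. Testing each at 0.05 / 20 keeps that chance just under 5%.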

Abstract

Recent studies have shown that users of visual analytics tools can have difficulty distinguishing robust findings in the data from statistical noise, but the true extent of this problem likely depends on both the incentive structure motivating their decisions and the ways that uncertainty and variability are (or are not) represented in visualisations. In this work, we perform a crowd-sourced study measuring decision-making quality in visual analytics, testing both an explicit incentive structure designed to reward cautious decision-making and a variety of designs for communicating uncertainty. We find that, while participants cannot control for false discoveries as well as idealised statistical procedures such as Benjamini-Hochberg, certain forms of uncertainty visualisation can improve the quality of participants’ decisions and lead to fewer false discoveries than making no correction for multiple comparisons. We conclude with a call for researchers to further explore visual analytics decision quality under different decision-making contexts, and for designers to directly present uncertainty and reliability information to users of visual analytics tools. This paper and the associated analysis materials are available at: https://osf.io/xtsfz/
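The abstract uses the Benjamini-Hochberg procedure as an idealised benchmark. The following is a minimal textbook sketch of that step-up procedure, which controls the false discovery rate at a chosen level q. It is not the authors' analysis code; that is in the OSF materials and may differ. The function name `benjamini_hochberg`, the default q = 0.05, and the example p-values are illustrative assumptions.

```python
from typing import List, Sequence


def benjamini_hochberg(p_values: Sequence[float], q: float = 0.05) -> List[bool]:
    """Return one reject flag per hypothesis, controlling the false
    discovery rate at level q (Benjamini-Hochberg step-up procedure).
    Hypothetical helper for illustration only."""
    m = len(p_values)
    # Sort hypothesis indices by ascending p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k (1-based) whose p-value satisfies p_(k) <= (k / m) * q.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank
    # Reject the k_max hypotheses with the smallest p-values.
    reject = [False] * m
    for idx in order[:k_max]:
        reject[idx] = True
    return reject


if __name__ == "__main__":
    # Five made-up p-values, one per comparison.
    print(benjamini_hochberg([0.028, 0.300, 0.004, 0.025, 0.045]))
```

For these made-up p-values the sketch rejects the hypotheses with p = 0.004, 0.025 and 0.028. The value 0.025 exceeds its own rank threshold (2/5 × 0.05 = 0.02) but is still rejected because a larger rank passes, which is the "step-up" property. The value 0.045 would be called significant without correction but is not rejected here.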