Researcher-Centered Design of Statistics: Why Bayesian Statistics Better Fit the Culture and Incentives of HCI

Matthew Kay, Gregory Nelson, and Eric Hekler

CHI 2016 | BEST PAPER HONORABLE MENTION

Abstract

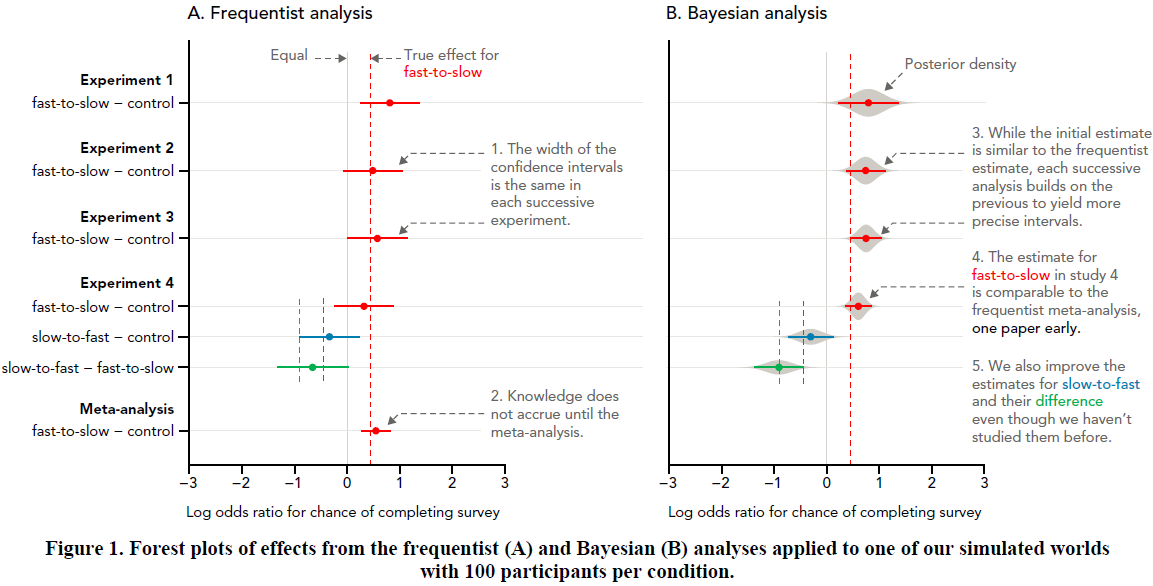

A core tradition of HCI lies in the experimental evaluation of the effects of techniques and interfaces to determine if they are useful for achieving their purpose. However, our individual analyses tend to stand alone, and study results rarely accrue into more precise estimates via meta-analysis: in a literature search, we found only 56 meta-analyses in HCI in the ACM Digital Library, 3 of which were published at CHI (often called the top HCI venue). Yet meta-analysis is the gold standard for demonstrating robust quantitative knowledge. We treat this as a user-centered design problem: the failure to accrue quantitative knowledge is not the users' (i.e., researchers') failure, but a failure to consider those users' needs when designing statistical practice. Using simulation, we compare hypothetical publication worlds that follow existing frequentist practice against worlds that follow Bayesian practice. We show that Bayesian analysis yields more precise effect estimates with each new study, facilitating knowledge accrual without traditional meta-analyses. Bayesian practices also allow more principled conclusions from small-n studies of novel techniques. These advantages make Bayesian practices a likely better fit for the culture and incentives of the field. Instead of admonishing ourselves to spend resources on larger studies, we propose using tools that more appropriately analyze small studies and encourage knowledge accrual from one study to the next. We also believe Bayesian methods can be adopted from the bottom up without the need for new incentives for replication or meta-analysis. These techniques offer the potential for a more user- (i.e., researcher-) centered approach to statistical analysis in HCI.
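To make the idea of knowledge accrual concrete, the following is a minimal sketch of how a posterior from one study can serve as the prior for the next, so that the estimate tightens with each small study. It is not the simulation used in the paper. It assumes a normal-normal conjugate model with a known outcome standard deviation, a weak starting prior, and made-up values for the true effect and sample size; the names `update_normal` and `simulate_studies` are hypothetical.

```python
import math
import random


def update_normal(prior_mean, prior_sd, estimate, std_error):
    """Conjugate normal-normal update, treating the standard error as known.

    Precisions (inverse variances) add, and the posterior mean is the
    precision-weighted average of the prior mean and the new estimate.
    """
    prior_prec = 1.0 / prior_sd ** 2
    data_prec = 1.0 / std_error ** 2
    post_prec = prior_prec + data_prec
    post_mean = (prior_prec * prior_mean + data_prec * estimate) / post_prec
    return post_mean, math.sqrt(1.0 / post_prec)


def simulate_studies(true_effect=0.5, sd=1.0, n_per_study=20, n_studies=5, seed=1):
    """Run a sequence of small studies, carrying the posterior forward as the prior.

    All parameter values here are illustrative assumptions, not values from the paper.
    """
    rng = random.Random(seed)
    mean, spread = 0.0, 10.0  # weak prior before any study (assumed)
    history = []
    for _ in range(n_studies):
        sample = [rng.gauss(true_effect, sd) for _ in range(n_per_study)]
        estimate = sum(sample) / n_per_study
        std_error = sd / math.sqrt(n_per_study)  # sd treated as known for simplicity
        mean, spread = update_normal(mean, spread, estimate, std_error)
        history.append((mean, spread))
    return history


history = simulate_studies()
for i, (m, s) in enumerate(history, 1):
    print(f"after study {i}: posterior mean = {m:.3f}, posterior sd = {s:.3f}")
```

Because precisions add under this model, the posterior standard deviation shrinks after every study regardless of the data drawn, which is the sense in which each new small study sharpens the running estimate without a separate meta-analysis step.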