AVEC: An Assessment of Visual Encoding Ability in Visualization Construction

Lily W. Ge, Yuan Cui, and Matthew Kay

ACM Human Factors in Computing Systems (CHI) 2025

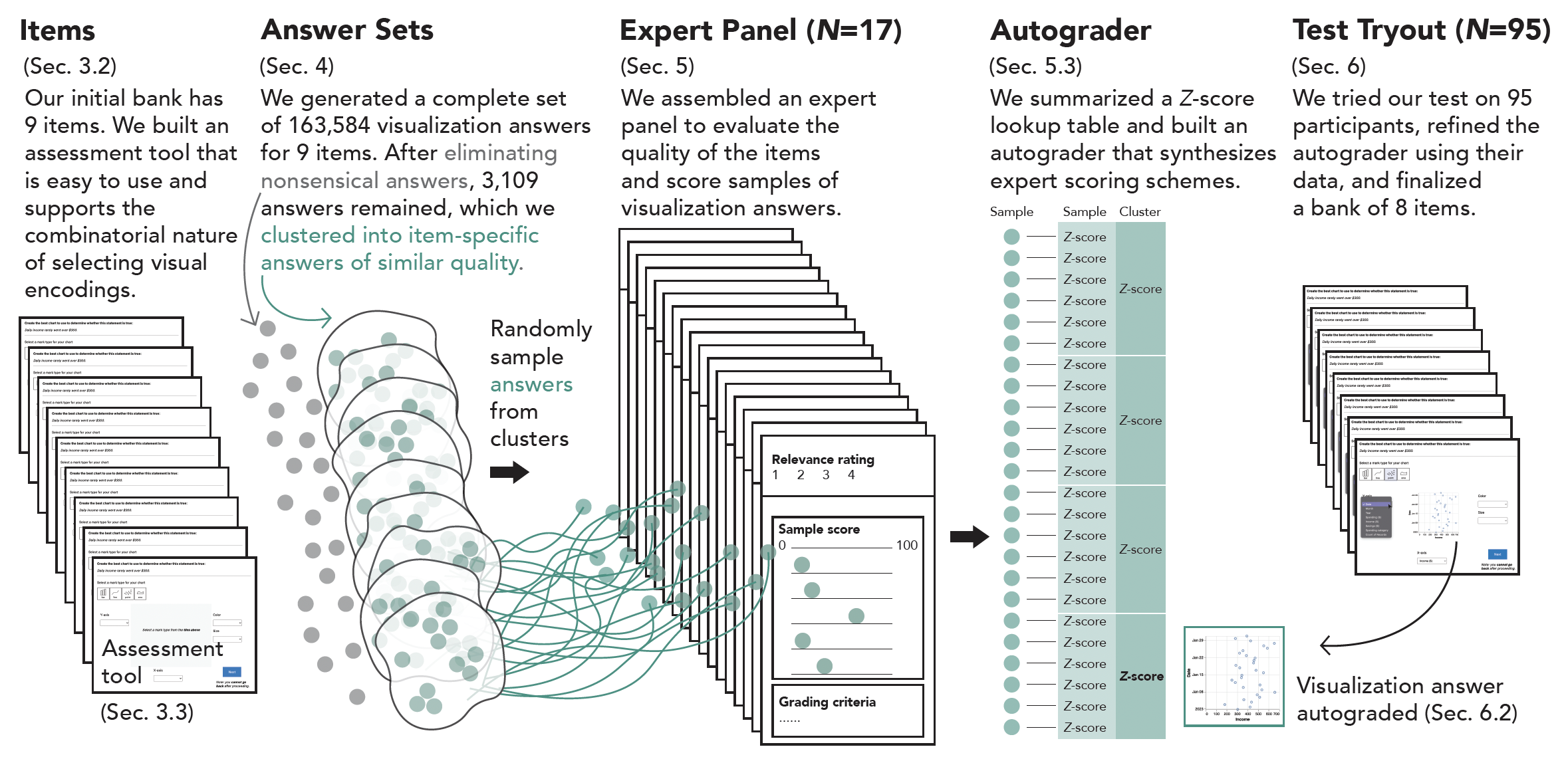

The systematic development process of AVEC. We created a design space to generate a bank of 9 items (Section 3.2) and built an assessment tool that is easy to use and supports the combinatorial nature of selecting appropriate visual encodings (Section 3.3). From a complete set of possible visualization answers per item (Section 4), we first eliminated poor-quality answers and then clustered the remaining answers by similar quality. We assembled an expert panel to rate the quality of the items and of samples from the clusters of remaining visualization answers (Section 5). We then built an autograder that synthesizes expert scoring schemes from expert ratings and data from the test tryout (Section 5.3 and Section 6.2) and automatically assigns scores to visualization answers.

Abstract

Visualization literacy is the ability to both interpret and construct visualizations. Yet existing assessments focus solely on visualization interpretation. A lack of construction-related measurements hinders efforts to understand and improve visualization literacy. We design and develop AVEC, an assessment of a person's visual encoding ability (a core component of the larger process of visualization construction), by: (1) creating an initial item bank using a design space of visualization tasks and chart types, (2) designing an assessment tool to support the combinatorial nature of selecting appropriate visual encodings, (3) building an autograder from expert scores of answers to our items, and (4) refining and validating the item bank and autograder through an analysis of test tryout data with 95 participants and feedback from the expert panel. We discuss recommendations for using AVEC, potential alternative scoring strategies, and the challenges in assessing higher-level visualization skills using constructed-response tests. Supplemental materials are available at https://osf.io/hg7kx/.