Subjective Probability Correction for Uncertainty Representations

Fumeng Yang, Maryam Hedayati, Matthew Kay

ACM Human Factors in Computing Systems (CHI) 2023 | BEST PAPER HONORABLE MENTION

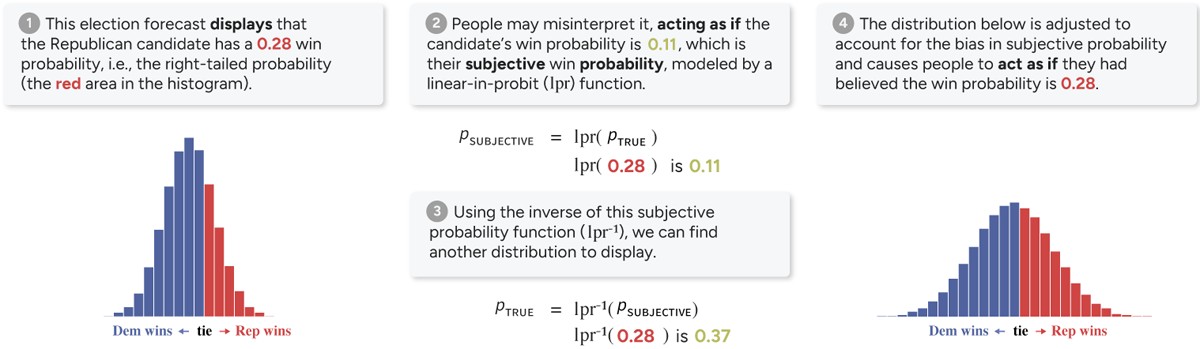

The concept of subjective probability correction: In this exemplar election forecast, the right-tailed probability represents the Republican candidate’s win probability. (1) When viewing a win probability of 0.28, (2) people may misinterpret it and act as if the candidate has a 0.11 probability of winning. (3) To compensate for this bias in decision-making, we can use the inverse of the subjective probability function, which allows us to start with the desired probability, say 0.28, and find another distribution to display. (4) The resulting bias-corrected distribution causes people to act as if their subjective probability of that candidate winning is the desired 0.28, while actually displaying a win probability of 0.37.

Abstract

We propose a new approach to uncertainty communication: we keep the uncertainty representation fixed, but adjust the distribution displayed to compensate for biases in people’s subjective probability in decision-making. To do so, we adopt a linear-in-probit model of subjective probability and derive two corrections to a Normal distribution based on the model’s intercept and slope: one correcting all right-tailed probabilities, and the other preserving the mode and one focal probability. We then conduct two experiments on U.S. demographically-representative samples. We show participants hypothetical U.S. Senate election forecasts as text or a histogram and elicit their subjective probabilities using a betting task. The first experiment estimates the linear-in-probit intercepts and slopes, and confirms the biases in participants’ subjective probabilities. The second, preregistered follow-up shows participants the bias-corrected forecast distributions. We find the corrections substantially improve participants’ decision quality by reducing the integrated absolute error of their subjective probabilities compared to the true probabilities. These corrections can be generalized to any univariate probability or confidence distribution, giving them broad applicability.
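To make the linear-in-probit idea concrete, the following minimal Python sketch assumes the model has the form probit(subjective p) = intercept + slope × probit(displayed p). The function names are hypothetical. The intercept and slope values are hand-picked so that the output roughly matches the 0.28 / 0.11 / 0.37 example in the figure; they are not the estimates reported in the paper. The `correct_normal` helper is one derivation that follows from this assumed model form for a Normal forecast, and it may differ in detail from the paper's own formulation.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard Normal: cdf is Phi, inv_cdf is the probit

# Hypothetical parameters, hand-picked to roughly reproduce the figure's
# example numbers. They are NOT the paper's fitted estimates.
INTERCEPT = 0.27
SLOPE = 2.57


def subjective_probability(p, intercept=INTERCEPT, slope=SLOPE):
    """Assumed model: probit(subjective) = intercept + slope * probit(p)."""
    return STD_NORMAL.cdf(intercept + slope * STD_NORMAL.inv_cdf(p))


def probability_to_display(desired, intercept=INTERCEPT, slope=SLOPE):
    """Inverse of the model: the probability to show so that people
    act as if the probability were `desired`."""
    return STD_NORMAL.cdf((STD_NORMAL.inv_cdf(desired) - intercept) / slope)


def correct_normal(mean, sd, intercept=INTERCEPT, slope=SLOPE):
    """Normal distribution to display so that every right-tailed probability
    of Normal(mean, sd) is perceived as intended under the assumed model.

    Solving intercept + slope * (mean2 - t) / sd2 = (mean - t) / sd for all
    thresholds t gives sd2 = slope * sd and mean2 = mean - intercept * sd.
    """
    return NormalDist(mean - intercept * sd, slope * sd)


if __name__ == "__main__":
    # Perceived probability when the forecast displays 0.28.
    print(round(subjective_probability(0.28), 2))
    # Probability to display so that people act as if it were 0.28.
    print(round(probability_to_display(0.28), 2))
```

Under these assumed parameters, applying `subjective_probability` to the output of `probability_to_display` returns the desired probability, which is the round trip the figure illustrates in steps (3) and (4).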